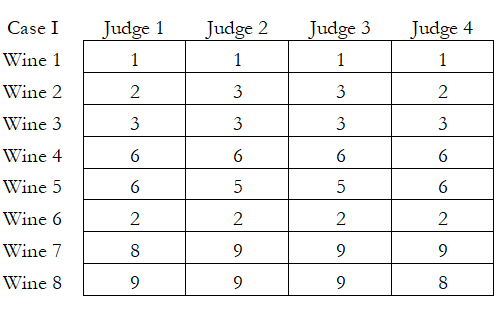

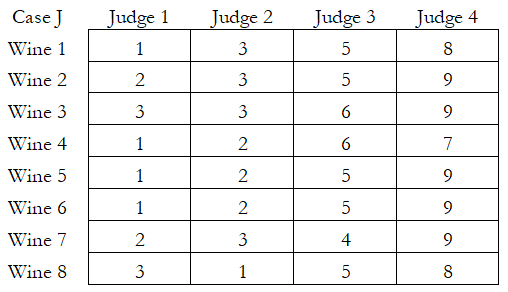

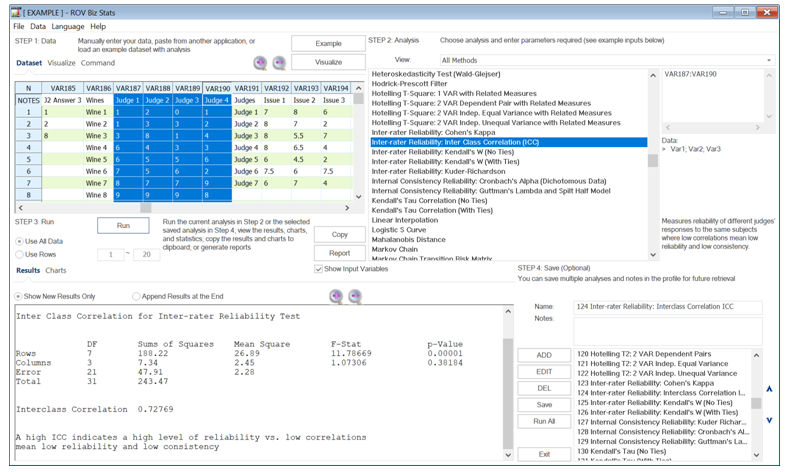

If we wish to test for both inter-rater and intra-rater reliability, we can use the Intraclass Correlation Coefficient (ICC) test. Cases I and J in the accompanying tables show example data from a double-blind test of eight wines: the bottles look identical, their labels have been removed and replaced with generic labels such as Wine 1, Wine 2, and so on. Suppose four sommeliers, or expert wine judges, were asked to grade each wine from 1 (low quality) to 10 (high quality).
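For reference, one common way to compute the quantities reported in these cases is a two-way analysis of variance on the wine-by-judge table. The formulas below assume the Shrout and Fleiss ICC(2,1) form (two-way random effects, absolute agreement, single rater). The software that produced the Case I and Case J results may use a different ICC variant, so treat this as an illustration rather than its exact formula. Let $x_{ij}$ be the score given to wine $i$ ($i = 1, \dots, n$) by judge $j$ ($j = 1, \dots, k$), with row means $\bar{x}_{i\cdot}$, column means $\bar{x}_{\cdot j}$, and grand mean $\bar{x}$:

$$
\begin{aligned}
MS_R &= \frac{k}{n-1} \sum_{i=1}^{n} \left(\bar{x}_{i\cdot} - \bar{x}\right)^2, \qquad
MS_C = \frac{n}{k-1} \sum_{j=1}^{k} \left(\bar{x}_{\cdot j} - \bar{x}\right)^2, \\
MS_E &= \frac{1}{(n-1)(k-1)} \sum_{i=1}^{n} \sum_{j=1}^{k} \left(x_{ij} - \bar{x}_{i\cdot} - \bar{x}_{\cdot j} + \bar{x}\right)^2, \\
\mathrm{ICC}(2,1) &= \frac{MS_R - MS_E}{MS_R + (k-1)\,MS_E + \frac{k}{n}\left(MS_C - MS_E\right)}, \qquad
F_R = \frac{MS_R}{MS_E}, \quad F_C = \frac{MS_C}{MS_E}.
\end{aligned}
$$

The row p-value comes from comparing $F_R$ with an $F(n-1, (n-1)(k-1))$ distribution, and the column p-value from comparing $F_C$ with $F(k-1, (n-1)(k-1))$. With eight wines and four judges, these are $F(7, 21)$ and $F(3, 21)$. Under this form, a large $MS_C$ (judges scoring at systematically different levels) inflates the denominator of the ICC and pulls it toward zero, even when each judge is internally consistent.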

In Case I, all four judges score each wine consistently. For example, Wine 1 is by far the worst, whereas Wines 7 and 8 are rated highly by every judge. This indicates a high level of consistency and reliability within each row. The ICC test returns an intraclass correlation of 0.9841, a row p-value of 0.0000, and a column p-value of 0.8538. The ICC shows a high level of consistency. The row p-value lets us reject the null hypothesis that all the wines (rows) have equal mean scores, while the column p-value means we fail to reject the null hypothesis that all the judges (columns) give equal mean scores.

In other words, the judges tend to be fairly consistent in their tastes (high ICC), possibly because they all have similar sommelier training. In addition, the wines differ from one another (row p-value of 0.0000): based on scores that we have now concluded are consistent and reliable, the wines are certainly of varying quality. In contrast, comparing the columns (i.e., comparing the judges with one another) shows consistency and no statistically significant difference in their ratings (column p-value of 0.8538). In other words, the judges have similar judgments.

Case J shows a very different situation. Judge 1 is probably a snob who considers no wine good. Because he scores every wine at a similarly low level, Judge 1 is internally consistent with himself, that is, he has high intra-rater reliability. In contrast, Judge 4 simply loves wine and scores any and all wines highly. Judge 4 is also internally consistent, but he does not agree with the other judges. The computed intraclass correlation is 0.00149, indicating low inter-rater consistency and low reliability among the judges. The row p-value of 0.3958 means we cannot reject the null hypothesis, so the rows, taken together, are not statistically different from one another. In this case, that indicates high intra-rater reliability, since each judge scores all the wines at about the same level. The column p-value of 0.0000 means we reject the null hypothesis and conclude that there is a statistically significant difference among the columns (the judges), which means there is no inter-rater consistency and no reliability in the wine scores.
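The contrast between the two cases can be reproduced in a short, dependency-free Python sketch. It assumes the Shrout and Fleiss ICC(2,1) formulation from a two-way ANOVA, which may not be the exact variant used to produce the results above. The two 8 x 4 score tables are hypothetical, made up only to mimic the qualitative patterns of Cases I and J; they are not the data in the accompanying tables. The helper names (`icc_two_way`, `f_sf`, `reg_inc_beta`) are likewise illustrative, and the F-distribution tail probability is computed by hand with a standard continued-fraction evaluation of the regularized incomplete beta function.

```python
import math


def _betacf(a, b, x, max_iter=200, eps=3e-14):
    """Continued fraction for the incomplete beta function (modified Lentz method)."""
    tiny = 1e-300
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c, d = 1.0, 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        # Each iteration applies the even and then the odd coefficient of the fraction.
        for aa in (m * (b - m) * x / ((qam + m2) * (a + m2)),
                   -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))):
            d = 1.0 + aa * d
            d = 1.0 / (d if abs(d) > tiny else tiny)
            c = 1.0 + aa / c
            c = c if abs(c) > tiny else tiny
            h *= d * c
        if abs(d * c - 1.0) < eps:  # last correction factor is ~1: converged
            break
    return h


def reg_inc_beta(a, b, x):
    """Regularized incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    front = math.exp(math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                     + a * math.log(x) + b * math.log1p(-x))
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _betacf(a, b, x) / a
    return 1.0 - front * _betacf(b, a, 1.0 - x) / b


def f_sf(f, d1, d2):
    """Upper-tail probability P(F > f) for F ~ F(d1, d2)."""
    if f <= 0.0:
        return 1.0
    return reg_inc_beta(d2 / 2.0, d1 / 2.0, d2 / (d2 + d1 * f))


def icc_two_way(scores):
    """Return (ICC(2,1), row p-value, column p-value) for a rows-by-raters table.

    Assumption: Shrout-Fleiss ICC(2,1) (two-way random effects, absolute
    agreement, single rater); other ICC variants use different formulas.
    """
    n, k = len(scores), len(scores[0])        # n rows (wines), k columns (judges)
    grand = sum(map(sum, scores)) / (n * k)
    row_means = [sum(row) / k for row in scores]
    col_means = [sum(row[j] for row in scores) / n for j in range(k)]
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_err = ss_total - ss_rows - ss_cols     # residual sum of squares
    df_r, df_c, df_e = n - 1, k - 1, (n - 1) * (k - 1)
    ms_r, ms_c, ms_e = ss_rows / df_r, ss_cols / df_c, ss_err / df_e
    icc = (ms_r - ms_e) / (ms_r + (k - 1) * ms_e + k * (ms_c - ms_e) / n)
    return icc, f_sf(ms_r / ms_e, df_r, df_e), f_sf(ms_c / ms_e, df_c, df_e)


# Hypothetical 8 wines x 4 judges tables (NOT the data behind Cases I and J).
case_i_like = [  # judges agree on which wines are good or bad
    [1, 2, 1, 1], [3, 3, 4, 3], [4, 5, 4, 4], [5, 5, 6, 5],
    [6, 6, 6, 7], [7, 6, 7, 7], [9, 9, 8, 9], [9, 10, 9, 9],
]
case_j_like = [  # Judge 1 scores everything low, Judge 4 everything high
    [2, 4, 6, 9], [2, 4, 7, 9], [1, 5, 6, 10], [1, 5, 7, 10],
    [1, 4, 6, 9], [2, 4, 6, 10], [1, 5, 7, 9], [2, 5, 7, 10],
]

for name, table in (("Case I-like", case_i_like), ("Case J-like", case_j_like)):
    icc, p_rows, p_cols = icc_two_way(table)
    print(f"{name}: ICC = {icc:.4f}, row p = {p_rows:.4f}, column p = {p_cols:.4f}")
```

With these invented tables, the first gives an ICC of about 0.97 with a tiny row p-value and a large column p-value, while the second gives an ICC of about 0.002 with a non-significant row p-value and a tiny column p-value. That is the same qualitative pattern as Cases I and J, although the numbers naturally differ from the 0.9841 and 0.00149 reported above. In practice, SciPy's `scipy.stats.f.sf` could replace the hand-written `f_sf` helper.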